Neural directional encoding for efficient and accurate view-dependent appearance modeling

Abstract

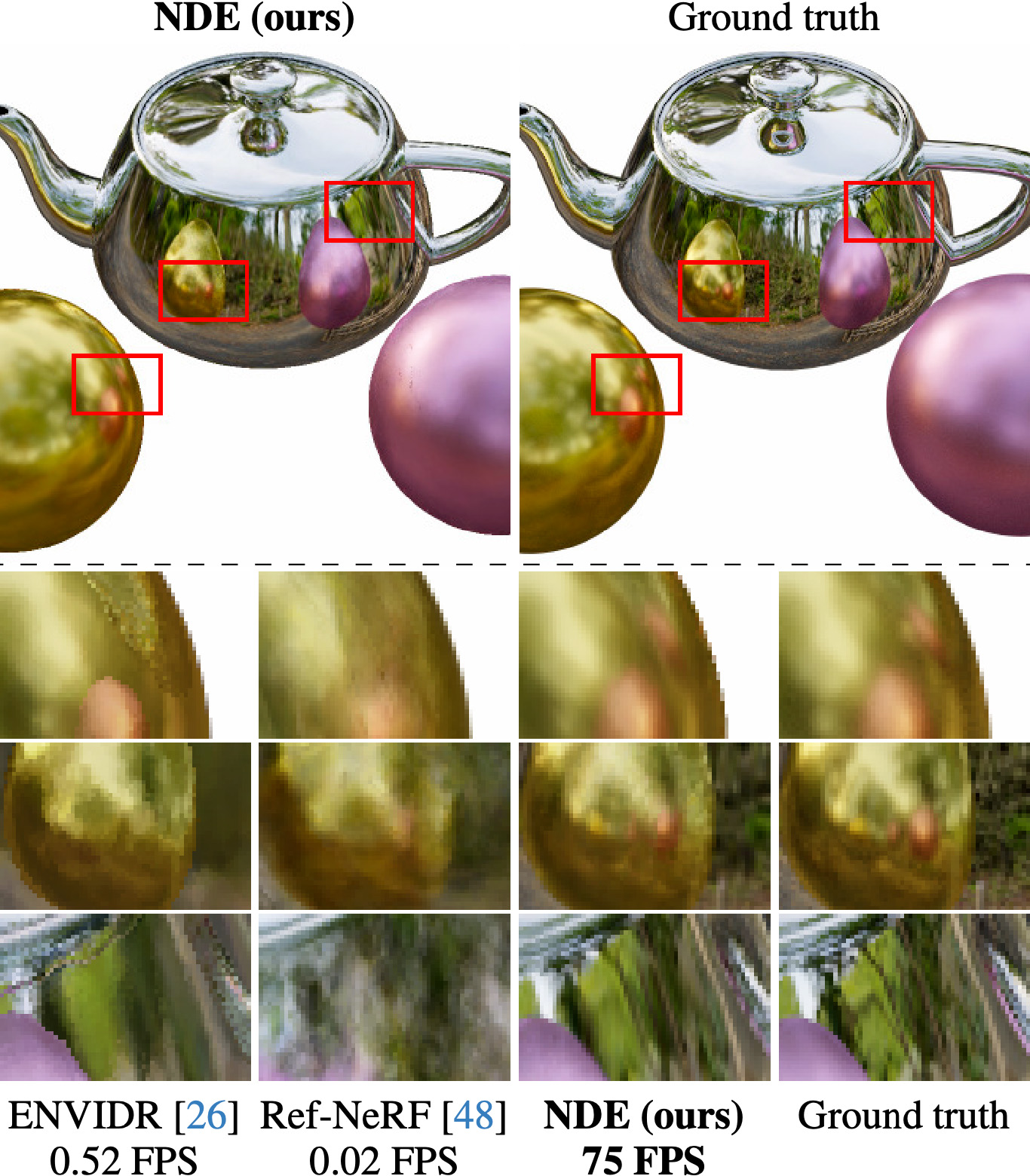

Novel-view synthesis of specular objects like shiny metals or glossy paints remains a significant challenge. Not only the glossy appearance but also global illumination effects, including reflections of other objects in the environment, are critical components to faithfully reproduce a scene. In this paper, we present Neural Directional Encoding (NDE), a view-dependent appearance encoding of neural radiance fields (NeRF) for rendering specular objects. NDE transfers the concept of feature-grid-based spatial encoding to the angular domain, significantly improving the ability to model high-frequency angular signals. In contrast to previous methods that use encoding functions with only angular input, we additionally cone-trace spatial features to obtain a spatially varying directional encoding, which addresses the challenging interreflection effects. Extensive experiments on both synthetic and real datasets show that a NeRF model with NDE (1) outperforms the state of the art on view synthesis of specular objects, and (2) works with small networks to allow fast (real-time) inference.
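To make the idea of moving a feature-grid encoding into the angular domain concrete, here is a minimal sketch. It is not the reference implementation. It assumes the directional features live on a cubemap-shaped grid and are fetched by bilinear interpolation. The cubemap layout, the function names `direction_to_cubemap` and `encode_direction`, and the grid shape are all illustrative assumptions. The cone tracing of spatial features described in the abstract is not covered.

```python
import math
import random


def direction_to_cubemap(d):
    """Map a nonzero 3D direction to (face, u, v) with u, v in [0, 1].

    The face layout (+x, -x, +y, -y, +z, -z) is an assumption of this sketch.
    """
    norm = math.sqrt(sum(c * c for c in d))
    d = [c / norm for c in d]
    axis = max(range(3), key=lambda k: abs(d[k]))   # dominant axis
    face = 2 * axis + (0 if d[axis] > 0 else 1)
    i, j = [k for k in range(3) if k != axis]
    # Project onto the face plane; both coordinates fall in [-1, 1].
    a = d[i] / abs(d[axis])
    b = d[j] / abs(d[axis])
    return face, 0.5 * (a + 1.0), 0.5 * (b + 1.0)


def encode_direction(grid, d):
    """Bilinearly interpolate a feature vector for direction d.

    grid[face][row][col] is a list of C floats (hypothetical learnable
    features). Filtering is clamped to one face; no cross-face blending.
    """
    face, u, v = direction_to_cubemap(d)
    res = len(grid[face])
    x, y = u * (res - 1), v * (res - 1)             # continuous texel coords
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, res - 1), min(y0 + 1, res - 1)
    tx, ty = x - x0, y - y0
    f00, f01 = grid[face][y0][x0], grid[face][y0][x1]
    f10, f11 = grid[face][y1][x0], grid[face][y1][x1]
    return [
        (1 - ty) * ((1 - tx) * p + tx * q) + ty * ((1 - tx) * r + tx * s)
        for p, q, r, s in zip(f00, f01, f10, f11)
    ]


# Random stand-in for a trained grid: 6 faces, 16x16 texels, 4 channels.
random.seed(0)
grid = [[[[random.gauss(0.0, 1.0) for _ in range(4)]
          for _ in range(16)] for _ in range(16)] for _ in range(6)]
feature = encode_direction(grid, (0.2, -0.5, 0.8))
print(len(feature))  # 4
```

In a full model, the interpolated feature vector would be passed to a small network that predicts view-dependent color. Because the high-frequency angular detail is stored in the grid rather than in network weights, the network can stay small, which is consistent with the abstract's claim about fast inference.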

Downloads and links

- paper (PDF, 3.7 MB)

- supplemental document (PDF, 1.4 MB)

- supplemental video (MP4, 44 MB)

- project page

- code – reference implementation

- citation (BIB)

Media

Supplemental video

BibTeX reference

@inproceedings{Wu:2024:NDE,
  author = {Liwen Wu and Sai Bi and Zexiang Xu and Fujun Luan and Kai Zhang and Iliyan Georgiev and Kalyan Sunkavalli and Ravi Ramamoorthi},
  title = {Neural directional encoding for efficient and accurate view-dependent appearance modeling},
  booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR) 2024},
  year = {2024}
}